Chinese Militarism Surges, D.C. Urges Taiwan To FIGHT

Here’s a scenario that should make every American pay attention. China is rehearsing an invasion of Taiwan. Not in theory. Not in war games at a think tank. The People’s Liberation Army is conducting large-scale drills simulating encirclement and blockade operations around the island. Chinese aircraft are crossing the median line of the Taiwan Strait […]

Dems Threaten Their Own People – “Do As We Say Or Else”

John Kennedy has a gift that most senators would trade their committee assignments for — the ability to say exactly what everyone’s thinking, but funnier. On Wednesday, the Louisiana senator looked at the Democratic Party’s latest standoff over DHS funding and delivered a diagnosis so sharp it should come with a co-pay. “The game room […]

Phone Companies Admit Spying On Conservatives

Picture this. You’re a sitting member of Congress. You’re going about your business — making calls, sending texts, doing the job voters sent you to do. And somewhere in a windowless DOJ office, Jack Smith’s team is vacuuming up your phone records like a Roomba with a warrant and zero shame. That’s not a hypothetical. […]

Tulsi Gabbard Unleashes On Democrat Liars

If you want to know who the deep state fears, watch who they attack. They’re not going after bureaucrats who play ball. They’re not targeting intelligence officials who protect the old guard. They’re coming for Tulsi Gabbard — and they’re coming hard. This week, Senator Mark Warner went on NBC and accused the Director of […]

ICE Now USELESS – Ridiculous New Restrictions Coming

You’ve got to hand it to Chuck Schumer and Hakeem Jeffries. When they want to sneak something past the American public, they don’t use the front door. They crawl through the basement window at 2 a.m. wearing socks on their shoes. While the country was glued to Epstein documents and kidnapping headlines, the two most […]

Watch: Trump Spars With “Worst Reporter” Over Stupid Question

Kaitlan Collins had her moment planned. The Epstein files had just dropped. Three million pages. Names everywhere. The biggest document release in the history of the case. Trump’s name wasn’t connected to the island. Wasn’t on the flight logs. Wasn’t in witness testimony linking him to criminal activity. Collins walked into the press availability anyway […]

Bernie Sanders Exposed For Bizarre Sex Magick

You think you know Bernie Sanders. The democratic socialist. The finger-wagging senator from Vermont. The man who honeymooned in the Soviet Union and almost won the Democratic nomination twice. You don’t know the half of it. A new book reveals that young Bernie Sanders built a homemade device designed to harness sexual energy and produce […]

Joe Rogan Exposes Obama – Video Proves His Sleazy Actions

“That sounds so Republican.” Joe Rogan couldn’t believe what he was hearing. But it wasn’t a Republican speaking. It was Barack Obama. In 2010. Defending deportation. The same policies Democrats now call “Nazi tactics” were mainstream Democratic positions just 15 years ago. The receipts are on tape. And Rogan is making sure millions of people […]

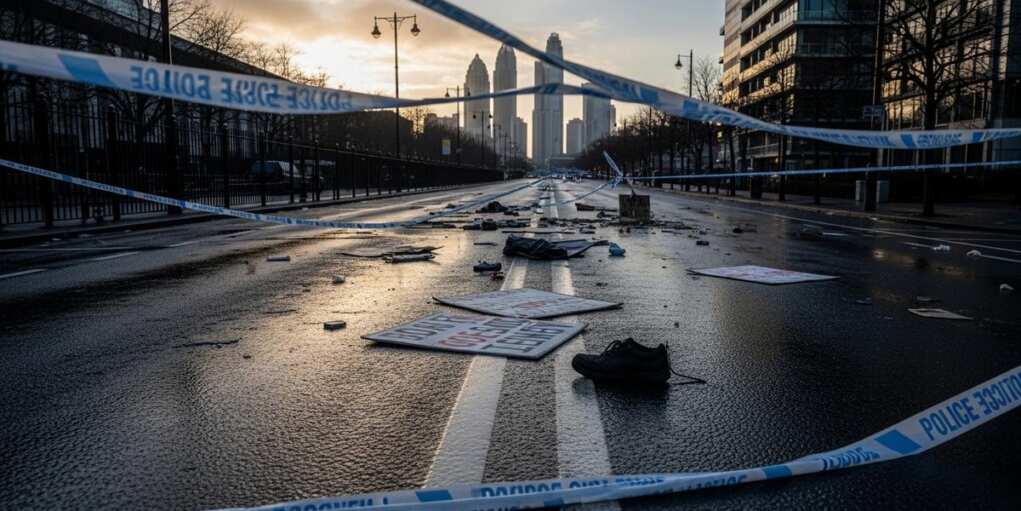

Breaking Bad Celeb Calls For Violent Insurrection

“This is time for a revolution.” That’s Giancarlo Esposito. You know him from Breaking Bad, The Mandalorian, countless other roles. Successful actor. Wealthy. Famous. Living the most privileged existence possible in human history. And he wants a revolution. Not just any revolution. One where people die. Lots of people. Maybe 50 million of them. Here’s […]

DeSantis Exposes Gavin Newsom’s Treasonous Plot At Davos

Gavin Newsom has a state burning — literally and figuratively. Homelessness exploding. Crime rampant. Population hemorrhaging. Wildfires destroying communities. So where was California’s governor this week? Davos. Frolicking with globalist elites. Taking photos with Alex Soros. And urging foreign leaders to rally against the President of the United States. Ron DeSantis has had enough. The […]

New Car Shut Off Tech – RINOs And Dems Turn Orwellian

Imagine driving down the highway when your car suddenly decides you’re impaired. Not a cop. Not a breathalyzer. Your car. It monitors your driving, determines you’re unfit, and shuts itself off. Maybe you swerved to avoid a pothole. Maybe you’re tired. Maybe the algorithm just glitched. Doesn’t matter. You’re stranded. No appeal. No due process. […]

Trump Tells Globalists Why He Really Wants Greenland

Donald Trump walked into Davos this week and did something no American president has done in decades: he told the global elite exactly what he wants and why he wants it. No apology tour. No diplomatic tap-dancing. Just Trump, a microphone, and a message for Denmark. Hand over Greenland. We’re building the Golden Dome. The […]

What Happens To Iran If Trump’s Assassinated?

Iran’s Supreme Leader Ayatollah Khamenei has been posting threats against Trump on social media, including images depicting the president in a coffin. Standard fare from a regime that’s been threatening American leaders for decades. Trump’s response? He’s left instructions. “They shouldn’t be doing it, but I’ve left notification. Anything ever happens, the whole country is […]

Trump Starts New “Board Of Peace” – And Guess Who He Invited

Donald Trump was asked about inviting Vladimir Putin to his new Board of Peace. His response? “Yeah, he’s been invited.” He said this at the College Football National Championship Game. While Indiana was beating Miami. Because that’s how Trump does diplomacy now: casually confirming geopolitical bombshells between plays. The Kremlin confirmed they received the invitation and […]

WH Destroys Liberal Reporter Over ICE Accusations

Karoline Leavitt just called a reporter a “left-wing hack” to his face after he claimed an ICE agent “recklessly” killed a woman who had run the agent over with her car. The Hill’s Niall Stanage asked about ICE operations and whether agents were acting appropriately given that “some people have died.” Leavitt asked him directly: “Why was […]

Most Popular